Install the dependency packages

sudo yum install libtool automake gettext libblkid-devel libuuid-devel

Download the xfs package

wget https://www.kernel.org/pub/linux/utils/fs/xfs/xfsprogs/xfsprogs-4.5.0.tar.gz

Install

tar zxvf xfsprogs-4.5.0.tar.gz

Check the xfs version

mkfs.xfs -V

$ sudo rpm -ivh https://repo.zabbix.com/zabbix/5.4/rhel/7/x86_64/zabbix-release-5.4-1.el7.noarch.rpm

Create the zabbix MySQL database and a dedicated account

$ mysql

mysql> CREATE DATABASE zabbix character set utf8 collate utf8_bin;

By default, running the following command is enough:

# zcat /usr/share/doc/zabbix-server-mysql*/create.sql.gz | mysql -uzabbix -p zabbix

However, I could not seem to find this compressed file, so I ran the following commands instead:

$ cd /usr/share/zabbix-mysql/

$ vim /etc/zabbix/zabbix_server.conf

$ service zabbix-server start

After restarting the httpd service, open http://zabbix-server-ip/zabbix to finish the configuration in the web interface.

The initial login is Admin/zabbix.

Install the Zabbix Agent

yum install zabbix-agent

Edit the Zabbix Agent configuration file

vim /etc/zabbix/zabbix_agentd.conf

Restart the Zabbix Agent

service zabbix-agent restart

We checked and found that the ServerActive address in the configuration file had not been changed to the Zabbix server's IP address, so we changed it to the Zabbix server IP.

We made the changes in:

/etc/zabbix/zabbix_agentd.conf

and restarted the service using:

$ sudo service zabbix-agent2 start

This fixed the issue.

The plugin appears as signed in Grafana's plugin panel, but /etc/grafana/grafana.ini still needs editing.

…

[plugins]

allow_loading_unsigned_plugins = alexanderzobnin-zabbix-datasource

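You can check the log with tailf /var/log/zabbix/zabbix_server.log

First, check whether a firewall or something similar is blocking the connection.

Then check the database's maximum number of connections. The MySQL default is 151.

1. vim /etc/my.cnf

[mysqld]

max_connections=<maximum number of connections you need>

2. vim /etc/systemd/system.conf

DefaultLimitNOFILE=65535

DefaultLimitNPROC=65535

3. Restart

systemctl daemon-reload

systemctl restart mysqld.service

View the maximum number of connections

mysql> show variables like 'max_connections';

View the current number of connections

mysql> show status like '%thread%';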

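lscpu displays information about the CPU. It gathers CPU architecture information mainly from sysfs and /proc/cpuinfo.

$ lscpu

Add the -p option to get output that is easier to parse

$ lscpu -p

# total cores = number of physical CPUs x cores per physical CPU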
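The formula above can be checked against the parsable output. The following is a minimal sketch, assuming the input is in the `lscpu -p=CORE,SOCKET` format (one `core,socket` pair per logical CPU, header lines starting with `#`); the function name `count_physical_cores` is made up for this example.

```shell
# Count physical cores from "core,socket" lines on stdin.
# Lines starting with '#' are lscpu header comments and are skipped;
# each distinct core,socket pair is counted once.
count_physical_cores() {
    awk -F, '!/^#/ && NF >= 2 && !seen[$1 "," $2]++ { n++ } END { print n + 0 }'
}

# Usage on a live system (requires lscpu from util-linux):
#   lscpu -p=CORE,SOCKET | count_physical_cores
```

With hyper-threading, several logical CPUs share one core,socket pair, which is why the pairs are de-duplicated before counting.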

If memory usage is not actually high at this point, you may need to run the following command to force it to stop:

$ killall -9 kswapd

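The Linux sudo command runs a command as the system administrator; in other words, a command run through sudo behaves as if root had run it.

To be able to use this command, an administrator must grant you the permission in /etc/sudoers.

The official definition is:

execute a command as another user

$ sudo [ option ] command

Options:

-l or --list: show the privileges of the user running sudo

-k: force a password prompt the next time sudo is run (whether or not N minutes have passed)

-b: run the command in the background

-p prompt: change the password prompt; %u is replaced by the user's account name and %h by the host name

-u username/uid: without this option the command runs as root; with it the command runs as username (uid is that user's numeric ID)

-s: run the shell specified by the SHELL environment variable, or the shell specified in /etc/passwd

-H: set the HOME environment variable to the home directory of the target user (root if -u is not given)

command: the command to run as the system administrator (or as another user when -u is given)

If you do not have sudo privileges, running a command produces the following output:

$ sudo ls

A typical use case: another user is logged in and you have sudo privileges, so you can test by running a command as that user.

$ sudo -u username ls -l

If you are not sure which commands you are allowed to run, use the -l option to list them; the result depends on what has been configured in sudoers.

$ sudo -l

Run the previous command with root privileges

$ sudo sh -c "cd /home ; du -s * | sort -rn "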

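Method 1: type two exclamation marks in the terminal and press Enter to quickly rerun the previous command.

$ !!

chmod g+r path/file    # add read permission, current directory

Basic usage: change the group of the file test.txt to group1

chgrp group1 test.txt

2. chown: change the file owner

## Change the owner of test.txt to user1

chown user1 test.txt

## Change the owner and the group of test.txt at the same time

chown user1:group1 test.txt

3. chmod: change file permissions

chmod 755 test

4. usermod: change the groups a user belongs to

Usually you only want to add the user to an additional group

usermod -a -G group1 user1

To change the user's primary group outright, use

usermod -g group1 user1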
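The effect of a numeric mode such as the `chmod 755` call above can be verified by reading back the mode string. This is a small sketch on a scratch file; the helper name `mode_string` and the temporary file are assumptions made for the example, not part of the original notes.

```shell
# Print the 10-character mode string (for example -rwxr-xr-x) of a file.
mode_string() {
    ls -ld "$1" | cut -c1-10
}

demo_file=$(mktemp)            # scratch file used only for this demo
chmod 755 "$demo_file"         # rwx for the owner, r-x for group and others
mode_string "$demo_file"       # prints -rwxr-xr-x
chmod g-x,o-rx "$demo_file"    # symbolic form: remove x from group, r and x from others
mode_string "$demo_file"       # prints -rwxr-----
rm -f "$demo_file"
```

Each octal digit is the sum of r=4, w=2 and x=1, so 7 is rwx and 5 is r-x.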

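To use Linux you log in as a user, which limits the resources you can access; groups make it easier to organize and manage users.

•Every user has a UserID

•Every user belongs to one primary group and to one or more supplementary groups

•Every group has a GroupID

•Every process runs as a user and is limited to the resources that user can access

•Every user who can log in has a designated SHELL

Every file in the system has an owning user and an owning group. User and group information is stored in the following three files:

/etc/passwd    user information

The id command shows information about the current user, and the passwd command changes the current user's password. The following commands show information about logged-in users:

whoami    show the current user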
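Each line of /etc/passwd holds seven colon-separated fields: login name, password placeholder, UID, GID, comment, home directory and shell. The sketch below pulls one field out of passwd-format input; the function name `passwd_field` and the sample account in the usage comment are made up for this example.

```shell
# Print one field of a user's passwd-format line read from stdin.
# $1 = login name, $2 = field number (1 name, 3 UID, 4 GID, 6 home, 7 shell).
passwd_field() {
    awk -F: -v user="$1" -v field="$2" '$1 == user { print $field; exit }'
}

# Usage against the real file:
#   passwd_field root 7 < /etc/passwd
```

Reading from stdin keeps the helper usable with other passwd-format sources, for example the output of `getent passwd`.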

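The usermod command modifies a user's information:

usermod options username

-l change the login name

-u change the uid

-g change the user's primary group

-G change the user's supplementary groups

-L lock the user

-U unlock the user

The userdel command deletes a user:

userdel username

userdel -r username    delete the user together with the user's home directory

The groupadd and groupmod commands create and modify a group:

groupadd group_name

groupmod -n new_group_name old_group_name

groupmod -g new_group_id group_name

For example:

This command removes the records of user sam from the system files (mainly /etc/passwd, /etc/shadow, /etc/group and so on) and also deletes the user's home directory.

Delete a group

Likewise, we sometimes need to delete a group; the groupdel command deletes a group.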

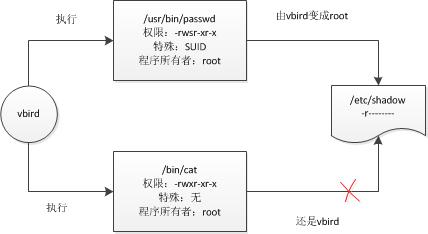

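When the s flag appears in place of the file owner's x permission, for example in the mode "-rwsr-xr-x", it is called Set UID, or SUID for short. SUID has the following restrictions and effects:

(1) SUID is effective only on binary programs;

(2) the user running the program needs x (execute) permission on it;

(3) the permission is in effect only while the program is running;

(4) the user running the program gains the permissions of the program's owner.

As an example, in Linux all account passwords are recorded in /etc/shadow, and only root can read this file and force a write to it. If another account, vbird, wants to change its own password, it needs to access /etc/shadow, yet only root can access that file. Is this a contradiction? In fact vbird can change the password stored in /etc/shadow, and this is what SUID is for.

From the description above we know that:

(1) vbird has x permission on /usr/bin/passwd, so vbird can run passwd;

(2) the owner of passwd is root;

(3) while running passwd, vbird temporarily gains root's permissions;

(4) /etc/shadow can therefore be modified by the passwd process that vbird runs.

However, if vbird uses cat to read /etc/shadow, the read is denied.

(SUID applies only to files, not to directories.)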

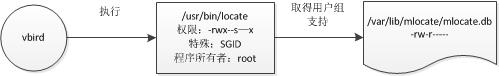

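When the s flag appears in place of the file owner's x permission it is called SUID; when it appears in place of the group's x permission it is called SGID (U stands for user, G for group). SGID has the following effects:

(1) SGID is effective on binary programs;

(2) the user running the program needs x permission on it;

(3) while the program runs, the user gains the privileges of the program's group.

As an example, the program /usr/bin/locate searches the contents of /var/lib/mlocate/mlocate.db. The permissions of the two files are:

-rwx--s--x root slocate /usr/bin/locate

-rw-r----- root slocate /var/lib/mlocate/mlocate.db

When the account vbird runs locate, it gains the privileges of the group slocate, and because slocate has r permission on mlocate.db, vbird can read mlocate.db.

Besides binary programs, SGID can also be set on directories. A directory with SGID set behaves as follows:

(1) a user with r and x permissions on the directory can enter it;

(2) the user's effective group inside the directory becomes the directory's group;

(3) if the user has w permission in the directory, new files the user creates get the same group as the directory.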
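The directory behaviour in point (3) can be observed on a scratch directory. This is a sketch assuming Linux with GNU coreutils and that you own the directory; the directory and the file name `report.txt` are made up for the example.

```shell
shared_dir=$(mktemp -d)              # scratch directory for the demo
chmod 2775 "$shared_dir"             # leading 2 = SGID, 775 = rwxrwxr-x
ls -ld "$shared_dir" | cut -c1-10    # prints drwxrwsr-x
touch "$shared_dir/report.txt"       # new file created inside the SGID directory
# Numeric GID of the directory and of the new file (ls -n, fourth column);
# with SGID set on the directory the two values match.
ls -ldn "$shared_dir" "$shared_dir/report.txt" | awk '{ print $4 }'
rm -rf "$shared_dir"
```

On a scratch directory the group usually already equals your own primary group, so the inheritance becomes visible only when the directory belongs to a different group, for example a shared project group.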

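SBIT currently takes effect only on directories.

The effect of SBIT on a directory is:

(1) when the user has w and x permissions on the directory, that is, write access,

(2) files or directories the user creates in it can be deleted only by that user and by root.

To set these bits, first convert them to numbers:

SUID->4

SGID->2

SBIT->1

Suppose you want to change a file's mode to "-rwsr-xr-x". The s sits in the owner permissions, so it is SUID, and a 4 goes in front of the original 755, giving 4755. Running chmod 4755 filename does the job. Upper-case S and T can also appear.

We know that s and t replace the x permission. If the x permission is not set to begin with, changing to s or t produces an upper-case S or T instead. For example:

Run chmod 7666 filename. Because 666 means "-rw-rw-rw-", with no x permission anywhere, the result is "-rwSrwSrwT".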
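The two chmod calls described above can be tried on a scratch file. This sketch assumes Linux with GNU coreutils and a file you own; `mode_string` is a helper name invented for the example.

```shell
# Print the 10-character mode string of a file.
mode_string() {
    ls -ld "$1" | cut -c1-10
}

f=$(mktemp)                # scratch file for the demo
chmod 4755 "$f"            # 4 = SUID on top of rwxr-xr-x
mode_string "$f"           # prints -rwsr-xr-x
chmod 4644 "$f"            # SUID without owner x: upper-case S
mode_string "$f"           # prints -rwSr--r--
chmod 7666 "$f"            # all three special bits, no x anywhere
mode_string "$f"           # the text above gives -rwSrwSrwT for this mode
rm -f "$f"
```

An upper-case S or T is a hint that the special bit is set but has no practical effect, because the matching execute bit is missing.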

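In Linux every file and directory has its own access permissions, which determine whether and how a user can access it. The three best-known permissions a file or directory can have are read, write and execute. When we create a file, the system grants the owner read and write permission by default. We can of course change this and add the permissions we need.

Besides these general permissions, Linux has another special one. Look at the tmp directory under the root directory: its permissions for other are rwt. What is the t permission, and what does it mean?

t is the sticky bit

The t permission mentioned above is the sticky bit. We set the sticky bit for other users on the cur directory from before by using chmod o+t.

We can see that we no longer have permission to delete a file created by the root user; this is the effect of the sticky bit.

The sticky bit exists for exactly this situation. Once it is set on a directory, a user with write permission on the directory still cannot delete other users' files in it; only the owner of a file and root can delete it. With the sticky bit set, a balance is kept: every user can freely write and delete their own data in the directory, but cannot delete other users' data at will.

If the execute permission for other is removed, the 't' changes to 'T'. Where did the original execute flag x go? The rule is this: if x is set in that position, the special flags (suid, sgid, sticky) are shown as lower-case letters (s, s, t); otherwise they are shown as upper-case letters (S, S, T).

The sticky bit applies to directories; it has no effect on files.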
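The t and T display described above is easy to reproduce on a scratch directory. This is a minimal sketch; the helper name `dir_mode` and the temporary directory are assumptions for the example.

```shell
# Print the 10-character mode string of a directory.
dir_mode() {
    ls -ld "$1" | cut -c1-10
}

d=$(mktemp -d)       # scratch directory for the demo
chmod 1777 "$d"      # leading 1 = sticky bit, 777 = rwxrwxrwx (like /tmp)
dir_mode "$d"        # prints drwxrwxrwt
chmod o-x "$d"       # remove execute for other; the sticky bit stays set
dir_mode "$d"        # prints drwxrwxrwT
rmdir "$d"
```

`chmod o+t` and `chmod +t` are the symbolic equivalents of the leading 1 in the numeric mode.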

Open HTTP and SSH in the system firewall.

sudo yum install curl policycoreutils openssh-server openssh-clients -y

curl -sS https://packages.gitlab.com/install/repositories/gitlab/gitlab-ce/script.rpm.sh | sudo bash

You can also download the package and install it afterwards

curl -LJO https://packages.gitlab.com/gitlab/gitlab-ce/packages/el/7/gitlab-ce-XXX.rpm/download

If this version cannot be downloaded, consider the Tsinghua mirror.

Create /etc/yum.repos.d/gitlab-ce.repo

[gitlab-ce]

sudo yum makecache

sudo gitlab-ctl reconfigure

You can now set the username and password.

Enjoy!!!

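The Linux tty command prints the file name of the terminal connected to standard input.

In Linux every peripheral device has a name and an identifier, and these are stored as special files under the /dev directory.

For example, ttyN is the kind of device discussed here, while sdN and the like are hard disk devices.

You can run the tty (teletypewriter) command to find the file name of the terminal you are currently using.

The official definition is:

tty - print the file name of the terminal connected to standard input

Usage is simple:

$ tty [-s][--help][--version]

The -s option is the same as --silent and --quiet: it suppresses the output and only returns an exit status.

By default it prints the current terminal

$ tty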
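The -s option is mostly useful in scripts, where only the exit status matters. The following sketch wraps it in a function; the name `stdin_is_tty` is made up for this example.

```shell
# Succeed when standard input is a terminal; print nothing either way.
stdin_is_tty() {
    tty -s
}

if stdin_is_tty; then
    echo "interactive session on $(tty)"
else
    echo "standard input is not a terminal"
fi
```

When the script's input comes from a pipe or a file, for example `sh script.sh < /dev/null`, the second branch runs.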

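Typing who in Linux shows the users currently logged in; the output includes the user name, the tty terminal, the login time and so on.

$ who

As mentioned earlier, one of the arguments of the write command specifies the ttyN.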

.. _linux_bc_beginner:

.. note::

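   The Northern Dipper and the southern stars shift day and night; the bird and the hare fly and run on.

   Wang Zhe (Yuan dynasty), "Busuanzi: Lamenting the World's Delusions"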

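The Linux bc command is an interactive calculator that supports arbitrary precision.

bc is also a language for arbitrary-precision numbers that executes statements interactively. It has some similarities to C. A standard math library is also available through a command-line option (-l).

The official definition is:

bc - An arbitrary precision calculator language

Usage:

$ bc [ -hlwsqv ] [long-options] [ file ... ]

By default it enters an interactive environment where you can calculate directly

$ bc

Type quit to exit.

Simple cases can be handled with a pipe, as follows:

$ echo "3.1415926 * 3" | bc

Use scale to set the precision; the following keeps 3 digits after the decimal point

$ echo "scale=3; 2/3" | bc

Math functions are also available, for example:

$ echo "sqrt(36)" | bc

ibase makes base conversion easy. The following reads the input 111 as a number in base 2, 4 and 8 respectively.

$ echo 'ibase=2;111' | bc

obase sets the output base. The following converts the octal input 111 to base 2, 4 and 8 respectively.

$ echo 'ibase=8;obase=2;111' | bc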
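The ibase and obase settings can be combined into a small helper. This is a sketch, not part of bc itself: the function name `convert_base` is made up, and it assumes bc is installed.

```shell
# Convert NUMBER from base FROM to base TO using bc.
# obase is set before ibase on purpose: once ibase changes, every later
# number, including the value assigned to obase, is read in the new input base.
convert_base() {
    from=$1 to=$2 number=$3
    echo "obase=$to; ibase=$from; $number" | bc
}

convert_base 8 2 111     # octal 111   -> 1001001
convert_base 2 10 111    # binary 111  -> 7
convert_base 16 10 FF    # hex FF      -> 255 (hex digits must be upper case)
```

Writing `ibase=16; obase=10` in that order would leave the output in base 16, because the 10 is then read as hexadecimal.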